Seven years ago we received a really nice house warming gift.

It was a Sous Vide Precision Cooker.

A fancy (Wi-Fi enabled) contraption that would slowly cook the inside of a steak. It brought the steak to the perfect temperature in a water bath, which then let you sear the outside in a skillet.

I only used it once.

Let’s call a spade a spade. Either the contraption is too fancy. Or I’m too basic.

Nevertheless, the kind gift turned out to be a colossal waste of money.

Is the $200 version of ChatGPT Pro worth it?

I ask myself the same question about some of my AI subscriptions.

Here’s a pie chart of my AI Tool Stack, totaling $285 a month (or ~$3.4k a year).

The lion’s share of this spending is the $200 a month for the Pro version (or $2,400 a year), but I have struggled to justify the ROI.

(Except that it enables me to try stuff out and report back to all you awesome readers.)

So I had to get cracking when OpenAI released its long-anticipated o3-pro model, which Sam Altman described as:

“Our most intelligent model, o3, designed to think longer and provide the most reliable responses.”

I had to run out and see if the ROI was finally there:

TL;DR — not yet. But that’s not OpenAI’s fault, that’s on me.

Start with the party trick

Whenever a new model comes out, I immediately head to Twitter to see how folks are using it.

And usually the first thing you find are neat (but not particularly useful) party tricks.

I started with this prompt:

Where is this?

And attached this picture:

The response was spot on:

The scene matches Manhattan Beach in Los Angeles’ South Bay.

Continuous row of modern, multi‑story beachfront homes rising just behind a relatively narrow strip of sand is characteristic of Manhattan Beach’s “Strand.”

OK, now go solve a real problem

Next up, I wanted to leverage the existing memory and deep context that ChatGPT has about me:

Based on everything that you know about me, what are the highest leverage questions I can ask the o3-pro model exclusively that cannot be answered well by o3 or deep research alone?

First surprise: it thought for 16 minutes.

Yes, I could’ve done an entire meditation session during the time it ran.

This wasn’t totally surprising, given that I predominantly use o3 and Gemini 2.5 Pro — both slower reasoning models which require one-shot prompting (i.e. no back-and-forth chatting).

It gave 7 answers and here were two of the best ones:

Career‑Transition Simulation for Clients

Given a 42‑year‑old PE partner with unvested carry, model three timelines (12 m, 24 m, 36 m) showing financial, psychological, and reputational trade‑offs of leaving now vs. later. Output as a scenario matrix with next‑step checklists.

This integrates finance math, identity psychology, and coaching tactics—real‑time bespoke analysis.

Unique Memoir Structure

Outline a braided‑narrative memoir that interleaves my Cambodian‑French heritage, Wall‑Street exit, and surfing life. Specify scene order, emotional beats, and thematic callbacks in a spreadsheet‑ready table.

Demands story‑architecture expertise merged with your life details—beyond generic memoir templates.

These answers are definitely not worth $200 a month, but they did bring to the fore a potential power of the model: making interdisciplinary connections.

Can this model make me more money?

Let’s get down to brass tacks.

Many professionals (like this $10 Billion hedge fund) want one answer:

What is the ROI of the new model?

And, can this help me do my job better?

In our AI Accelerator (for financial professionals), I offered up “access” to my model, and a request about improving business development came back:

Pretend like you run biz dev for an alternative asset manager with multiple funds and XX bn of AUM. You run a team of 50 people who are smart, but not really using LLMs. Give a list of 50 ways, with detailed instructions (1 paragraph each) that LLMs could be used to save the biz time and/or make more money. Start with easy wins and as the list grows, make it the ideas more and more creative. Don't include anything that involves coding or APIs

Here were a few of the results:

#4 First‑pass pitch‑book language.

Feed last quarter’s deck into ChatGPT and instruct: “Update all performance charts verbally (no graphics) and rewrite the narrative to reflect YTD figures in plain English.” Designers then paste the refreshed copy into PowerPoint, slashing drafting cycles.

#33 Cross‑fund upsell matrix:

Upload your full LP roster with invested funds; ask: “For each LP, suggest the logical next product based on mandate and commitments.” Equip relationship managers with data‑backed upsell paths.

#37 Socratic coaching for juniors:

Tell ChatGPT: “Act as a mentor; ask me sequential questions to improve my 2‑page memo on Fund XIX positioning.” They iterate privately and surface stronger work.

This was a pretty solid list (and better than some of the cheaper models), but probably not worth $200/month.

My failure of imagination

Here’s what happens every time a new model comes out.

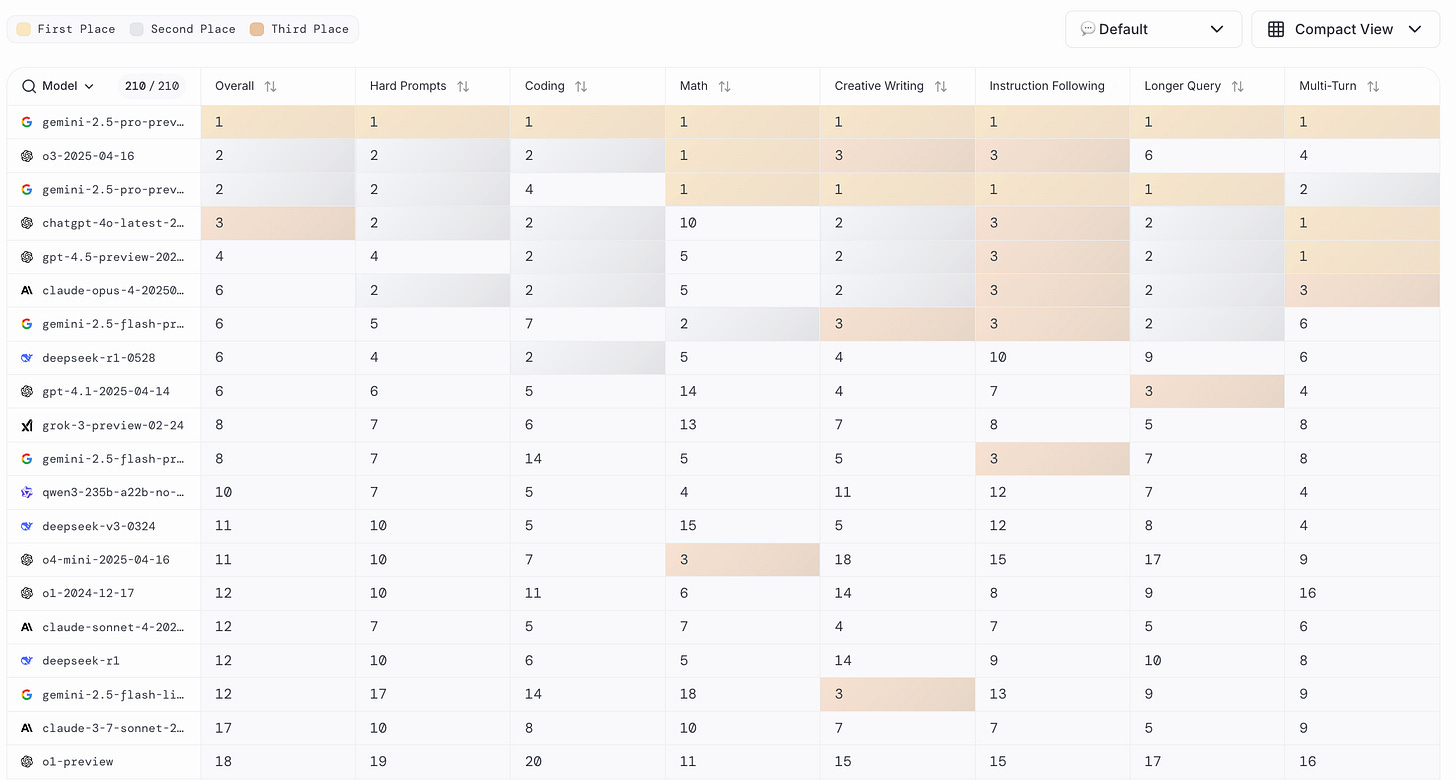

There are a bunch of model competition sites where each model is fed some representative problems in coding, math, image recognition, etc.

This one actually takes place in an “arena” (i.e. LMArena.ai):

You’ll then see a bunch of charts like this:

But here’s my reality — these charts mean nothing to me.

Especially since coding, math and hard sciences are NOT a part of my workflow.

So then what?

The newsletter describes how hard it is for individuals to differentiate between these powerful new model releases. They knew that the model was “much smarter” but in order to see that they needed to give it “a lot more context.”

But here’s the problem — they were running out of context!

So they took a different approach:

My co-founder Alexis and I took the time to assemble a history of all of our past planning meetings at Raindrop, all of our goals, even record voice memos: and then asked o3-pro to come up with a plan.

We were blown away; it spit out the exact kind of concrete plan and analysis I’ve always wanted an LLM to create — complete with target metrics, timelines, what to prioritize, and strict instructions on what to absolutely cut.

The plan o3 gave us was plausible, reasonable; but the plan o3 Pro gave us was specific and rooted enough that it actually changed how we are thinking about our future.

This is hard to capture in an eval.

I have a lot of context across my writing, transcripts and training materials — but I have yet to aggregate it all in one place and figure out what answer I need from it all.

And this failure of imagination is purely on me.

The fantastic folks at

related to this challenge:

The frontier models, o3-pro, Claude Opus 4, Gemini 2.5 Pro, possess sufficient capability that the differences are subtle and often emerge only in the most complex use cases and when we provide ample context. We’ve yet to have a moment similar to what’s described above in our own use of o3-pro, but we don’t believe it’s because the model lacks capability.

The “floor and ceiling” framework

There are dueling forces at play here.

I learned about this concept from my friends at ApplyAI, an AI training and change management firm.

First, you have to raise the floor. This is your baseline AI fluency.

This includes understanding model selection, tools, prompting, context windows, workflows and use cases.

My prediction is that this baseline will be table stakes in the next 6-12 months — akin to applying to a finance job without knowing how to use Excel.

The trickier part is raising the ceiling. This is the higher-order challenge of identifying the true transformative nature of these tools. Maybe it’s agents. Or coding. Or reinventing the workflows of your firm.

For me, the ROI of my $2,400 ChatGPT subscription is quite simple.

It forces me to use the tools.

I have been using o1-pro, o3 and now o3-pro since the day they came out.

o1-pro forced me to understand one-shot prompting, lean more heavily on markdown and start using Obsidian for prompt/context management.

o3 just kicks ass. It is so much more accurate, has “Deep Search lite” features and just seems really really smart. It’s also gotten way faster over the past 3 months.

(I’m blown away by how most people don’t know it even exists.)

Now o3-pro has me thinking — if these models are so good at writing code — how might this become a part of my workflow?

I’ve already started down the vibe coding path (migrating and rebuilding khehy.com from scratch).

But that’s not raising the ceiling enough.

Stay tuned.

I feel this and it’s nice to know you feel this way. There’s an interesting gap between what I use the AI tools for and what I could be using it for but just don’t know what to ask. But sometimes I have to admit I may be hitting the limit of either what it can do or what I can do with it.

I appreciate this honest update. I am still trying to find an answer to what AI is doing with this very specific and personal info on one's life and business. This is more than intellectual curiosity. I am finding AI very helpful to my workflow. But as I 'feed' the LLM more complex problems to solve, I wonder where the line is on privacy and confidentiality. Or am I being naive to think there is any at all?